Optimisation et Simulation Monte Carlo : Entrelacements

Funded by the Fondation Simone et Cino Del Duca in 2019, for the period January 2020 to December 2023.

P.I.: Gersende Fort.

Scope: when optimization and Monte Carlo sampling intertwine. The goal of this project is twofold:

- How can optimization techniques and Monte Carlo sampling be combined to solve challenging optimization problems?

- How can Monte Carlo sampling and optimization techniques be combined to improve the efficiency of samplers?

Both questions are motivated by computational challenges in statistics and statistical learning.

Two main directions of research are followed:

- Stochastic Majorization-Minimization algorithms for large-scale learning: Monte Carlo sampling is used to handle the intractable quantities in the majorization step, possibly in a federated learning setting.

- Team members: Eric Moulines (CMAP, Ecole Polytechnique), Hoi-To Wai (Chinese University of Hong Kong), Aymeric Dieuleveut (CMAP, Ecole Polytechnique), Geneviève Robin (LAMME, CNRS), Pierre Gach (IMT, Université Toulouse III), Florence Forbes (INRIA Grenoble), Hien Duy Nguyen (Univ. Queensland, Australia).

- Code: GitHub, gfort-lab

- Efficient Monte Carlo sampling for a Bayesian analysis of the Covid-19 reproduction number: optimization techniques improve on classical MCMC samplers for non-smooth, concave target log-densities, which makes it possible to estimate credibility regions from the posterior distribution of the Covid-19 reproduction number.

- Team members: Patrice Abry (LP-ENSL, CNRS), Juliette Chevallier (IMT, INSA), Barbara Pascal (CRIStAL, CNRS), Nelly Pustelnik (LP-ENSL, CNRS), Hugo Artigas (Ecole Polytechnique).

- Code: GitHub, gfort-lab

- Webpage: see below for the estimates for France since January 2022.
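To make the first research direction concrete, here is a minimal sketch of one classical stochastic MM scheme: a mini-batch variant of Stochastic Approximation EM (SAEM). The toy model is an assumption chosen so the result can be checked: an equal-weight mixture of two unit-variance Gaussians with unknown means. The E-step is replaced by drawing the latent labels (Monte Carlo), the sufficient statistics are averaged with a decreasing step size, and the M-step is in closed form. The function names `responsibility` and `saem_mixture`, the step-size schedule and all default values are hypothetical; this is not the algorithm of the publications listed below.

```python
import math
import random


def responsibility(x, mu1, mu2):
    """P(z = 1 | x) under the mixture 0.5 N(mu1, 1) + 0.5 N(mu2, 1)."""
    # log p(x | z=1) - log p(x | z=2); the Gaussian normalising constants cancel
    d = 0.5 * (x - mu2) ** 2 - 0.5 * (x - mu1) ** 2
    # logistic(d), split in two branches so that math.exp never overflows
    if d >= 0:
        return 1.0 / (1.0 + math.exp(-d))
    e = math.exp(d)
    return e / (1.0 + e)


def saem_mixture(data, mu_init, n_iter=500, batch=50, seed=0):
    """Hypothetical mini-batch SAEM estimate of the two component means."""
    rng = random.Random(seed)
    mu1, mu2 = mu_init
    # running estimate of the normalised complete-data sufficient statistics:
    # s[0] = mean of 1{z=1}, s[1] = mean of 1{z=1} x, s[2] = mean of 1{z=2} x
    s = [0.5, 0.5 * mu1, 0.5 * mu2]
    for k in range(1, n_iter + 1):
        minibatch = rng.sample(data, batch)
        # Monte Carlo E-step: one draw of the latent label per mini-batch point
        acc = [0.0, 0.0, 0.0]
        for x in minibatch:
            if rng.random() < responsibility(x, mu1, mu2):
                acc[0] += 1.0
                acc[1] += x
            else:
                acc[2] += x
        s_hat = [a / batch for a in acc]
        # stochastic approximation step; 0 < gamma < 1 keeps s[0] inside (0, 1)
        gamma = (k + 1) ** -0.6
        s = [si + gamma * (sh - si) for si, sh in zip(s, s_hat)]
        # M-step: closed-form maximiser of the complete-data log-likelihood
        mu1 = s[1] / s[0]
        mu2 = s[2] / (1.0 - s[0])
    return mu1, mu2


if __name__ == "__main__":
    gen = random.Random(1)
    data = [gen.gauss(-2.0, 1.0) if gen.random() < 0.5 else gen.gauss(3.0, 1.0)
            for _ in range(5000)]
    # expected to be close to the true means (-2, 3)
    print(saem_mixture(data, mu_init=(-1.0, 1.0)))
```

Each iteration only touches a mini-batch, which is what makes this family of methods attractive at large scale; variance-reduced and federated versions modify how `s_hat` is built.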
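For the second research direction, here is a minimal sketch of one standard way to run Langevin Monte Carlo on a non-smooth log-concave target: the Moreau-Yosida regularised unadjusted Langevin algorithm (MYULA). The non-smooth term is replaced by its Moreau envelope, whose gradient only requires the proximal operator. The scalar target π(θ) ∝ exp(-(θ - y)²/(2σ²) - λ|θ|), the function names and the tuning values are assumptions for illustration; the Covid-19 model in the publications below uses a multivariate, non-separable penalty and possibly a different proximal sampler.

```python
import math
import random


def soft_threshold(x, t):
    """Proximal operator of t * |.| evaluated at x (soft thresholding)."""
    return math.copysign(max(abs(x) - t, 0.0), x)


def myula(y, sigma, lam, n_iter=50000, gamma=0.01, mu=0.02, burn_in=5000, seed=0):
    """Hypothetical MYULA chain for pi(theta) ~ exp(-(theta - y)^2 / (2 sigma^2) - lam * |theta|).

    f(theta) = (theta - y)^2 / (2 sigma^2) is smooth; g(theta) = lam * |theta| is not,
    so g is replaced by its Moreau envelope with parameter mu.
    """
    rng = random.Random(seed)
    theta = y
    samples = []
    for k in range(n_iter):
        grad_f = (theta - y) / sigma ** 2
        # gradient of the Moreau envelope of g: (theta - prox_{mu g}(theta)) / mu
        grad_env = (theta - soft_threshold(theta, mu * lam)) / mu
        # unadjusted Langevin step on the smoothed potential f + g^mu
        theta = theta - gamma * (grad_f + grad_env) + math.sqrt(2.0 * gamma) * rng.gauss(0.0, 1.0)
        if k >= burn_in:
            samples.append(theta)
    return samples


if __name__ == "__main__":
    draws = myula(y=5.0, sigma=1.0, lam=1.0)
    # here y is far from 0, so the posterior mean is close to y - lam * sigma**2 = 4
    print(sum(draws) / len(draws))
```

The gradient of f + g^μ is Lipschitz with constant 1/σ² + 1/μ, so the step size should stay well below 2/(1/σ² + 1/μ); a smaller μ reduces the smoothing bias but forces a smaller γ.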

Publications

- A. Dieuleveut, G. Fort, E. Moulines and H.-T. Wai. Stochastic Approximation beyond Gradient for Signal Processing and Machine Learning. hal-03979922. IEEE Trans. Signal Processing, 71:3117-3148, 2023. paper

- G. Fort and E. Moulines. Stochastic Variable Metric Proximal Gradient with variance reduction for non-convex composite optimization. hal-03781216. Accepted for publication in Statistics and Computing, March 2023. paper

- P. Abry, G. Fort, B. Pascal and N. Pustelnik. Covid19 Reproduction Number: Credibility Intervals by Blockwise Proximal Monte Carlo Samplers. hal-03611079. IEEE Trans. Signal Processing, 71:888-900, 2023. paper

- A. Dieuleveut, G. Fort, E. Moulines, G. Robin. Federated Expectation Maximization with heterogeneity mitigation and variance reduction. hal-03333516. In Conference Proceedings NeurIPS, Vol 34, 2021. paper

- G. Fort, E. Moulines, P. Gach. Fast Incremental Expectation Maximization for non-convex finite-sum optimization: non-asymptotic convergence bounds. hal-02617725. Statistics and Computing, 31, article 48 (24 pages), 2021. paper

- G. Fort, E. Moulines, H.-T. Wai. A Stochastic Path Integrated Differential Estimator Expectation Maximization Algorithm. hal-03029700. In Conference Proceedings NeurIPS, Vol 33, pp. 16972-16982, 2020. paper

- P. Abry, J. Chevallier, G. Fort and B. Pascal. Pandemic Intensity Estimation From Stochastic Approximation-Based Algorithms. hal-04174245. Accepted for publication in CAMSAP 2023 conference proceedings. paper

- P. Abry, G. Fort, B. Pascal and N. Pustelnik. Credibility intervals for the reproduction number of the Covid-19 pandemic using Proximal Langevin samplers. hal-03902144. Accepted for publication in the EUSIPCO 2023 proceedings. paper

- H. Artigas, B. Pascal, G. Fort, P. Abry and N. Pustelnik. Credibility Interval Design for Covid19 Reproduction Number from nonsmooth Langevin-type Monte Carlo sampling. hal-03371837. Accepted for publication in EUSIPCO 2022 conference proceedings. paper

- P. Abry, G. Fort, B. Pascal and N. Pustelnik. Temporal Evolution of the Covid19 pandemic reproduction number: Estimations from Proximal optimization to Monte Carlo sampling. hal-03565440. Accepted for publication in EMBC 2022 conference proceedings. paper

- H. Duy Nguyen, F. Forbes, G. Fort and O. Cappé. An online Minorization-Maximization algorithm. hal-03542180. Accepted for publication in IFCS 2022 proceedings. paper

- G. Fort and E. Moulines. The Perturbed Prox-Preconditioned SPIDER algorithm for EM-based large scale learning. hal-03183775. Accepted to IEEE Statistical Signal Processing Workshop (SSP 2021). paper

- G. Fort and E. Moulines. The Perturbed Prox-Preconditioned SPIDER algorithm: non-asymptotic convergence bounds. hal-03183775. Accepted to IEEE Statistical Signal Processing Workshop (SSP 2021). paper

- G. Fort, E. Moulines, H.-T. Wai. Geom-SPIDER-EM: Faster Variance Reduced Stochastic Expectation Maximization for Nonconvex Finite-Sum Optimization. hal-03021394. 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2021), pp. 3135-3139. paper

- P. Abry, G. Fort, B. Pascal and N. Pustelnik. Estimation et intervalles de crédibilité pour le taux de reproduction de la Covid19 par échantillonnage Monte Carlo Langevin proximal (Estimation and credibility intervals for the Covid19 reproduction number by proximal Langevin Monte Carlo sampling). hal-03611891. Accepted for publication in GRETSI 2022 conference proceedings. paper

Invited talks at international or national conferences

- (plenary talk) TBA. Monte Carlo and Quasi-Monte Carlo Methods in Scientific Computing (MCQMC), Waterloo, Canada, August 2024.

- (plenary talk) TBA. French-German-Spanish Conference on Optimization, Gijón, Spain, June 2024.

- (plenary talk) L’Approximation Stochastique au-delà du Gradient (Stochastic Approximation beyond the Gradient). Colloque GRETSI, Grenoble, France, August 2023.

- When Markov chains control Monte Carlo sampling. Conference Processus markoviens, semi-markoviens et leurs applications, Montpellier, France, June 2023.

- Stochastic Variable Metric Forward-Backward with variance reduction for non-convex optimization. Workshop Learning and Optimization in Luminy, CIRM, Luminy, France, October 2022.

- (plenary talk) Algorithmes Majoration-Minoration stochastiques pour l’Apprentissage Statistique grande échelle (Stochastic Majorization-Minimization algorithms for large-scale statistical learning). 53èmes Journées de Statistique de la SFDS, Lyon, France, June 2022.

- (plenary talk) Stochastic Majorize-Minimization algorithms for large scale learning. Journées de Statistique du Sud, Avignon, France, May 2022.

- Federated Expectation Maximization with heterogeneity mitigation and variance reduction. Workshop Current Developments in MCMC methods, Warsaw, Poland, December 2021.

- A Variance Reduced Expectation Maximization algorithm for finite-sum optimization. Conference in Numerical Probability, in honour of Gilles Pagès, Paris, France, May 2021.

- Fast Incremental Expectation Maximization algorithm: how many iterations for an ε-stationary point? Conference Optimization for Machine Learning, CIRM, Luminy, France, March 2020.

Contributed talks at international or national conferences

- Pandemic Intensity Estimation From Stochastic Approximation-Based Algorithms. 2023 International Workshop on Computational Advances in Multi-Sensor Adaptive Processing (CAMSAP), Herradura, Costa Rica, December 2023.

- Credibility intervals for the reproduction number of the Covid-19 pandemic using Proximal Langevin samplers. 31st European Signal Processing Conference (EUSIPCO), Helsinki, Finland, August 2023.

- Stochastic Approximation beyond Gradient. European Meeting of Statisticians, Warsaw, Poland, July 2023.

- Credibility Interval Design for Covid19 Reproduction Number from nonsmooth Langevin-type Monte Carlo sampling. 30th European Signal Processing Conference (EUSIPCO), Belgrade, Serbia, August 2022.

- Temporal Evolution of the Covid19 pandemic reproduction number: Estimations from Proximal optimization to Monte Carlo sampling. 44th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Glasgow, United Kingdom, July 2022.

- Federated Expectation Maximization with heterogeneity mitigation and variance reduction. Journée du GDR ISIS « Statistical Learning with missing values », online, December 2021.

- Confidence intervals for Covid-19 Reproduction Number Estimation combining NonSmooth Convex Proximal Optimization and Stochastic Sampling. Conference on Complex Systems, Lyon, France, October 2021.

- The Perturbed Prox-Preconditioned SPIDER algorithm for EM-based large scale learning. IEEE Statistical Signal Processing Workshop (SSP), online, July 2021.

- GEOM-SPIDER-EM: faster variance reduced Stochastic Expectation Maximization for Nonconvex Finite-Sum Optimization. International Conference on Acoustics, Speech and Signal Processing (ICASSP), online, June 2021.

- A Stochastic Path-Integrated Differential EstimatoR EM algorithm. Neural Information Processing Systems (NeurIPS), online, December 2020.

Invited talks in seminars of academic research laboratories at national and international universities

- TBA. Séminaire Math-Bio-Santé de l’IMT, Toulouse, France; June 2024.

- TBA. Séminaire de l’équipe DATA du LJK, Grenoble, France; January 2024.

- Stochastic Approximation Beyond Gradient. Colloque de l’Institut de Santé Globale, Geneva, Switzerland; October 2023.

- Stochastic Approximation Beyond Gradient. OptAzur: Optimization in French Riviera, Antibes, France; October 2023.

- Stochastic Variable Metric Forward-Backward with variance reduction. Séminaire Parisien d’Optimisation, Paris, France; April 2023.

- Credibility Intervals for Covid19 reproduction number from Nonsmooth Langevin-type Monte Carlo sampling. Seminar of the Maxwell Institute for Mathematical Sciences, Heriot-Watt University; Edinburgh, United Kingdom; October 2022.

- Variance reduced Majorize-Minimization algorithms for large scale learning. Seminar of the School of Mathematics and Physics, University of Queensland; Brisbane, Australia; April 2022.

- The Expectation Maximization algorithm for Federated learning. Seminar of the GAIA team, GIPSA-lab; Grenoble, France; January 2022.

- Algorithme Expectation Maximization avec réduction de variance pour l’optimisation de sommes finies (A variance reduced Expectation Maximization algorithm for finite-sum optimization). Séminaire Statistique et Optimisation de l’IMT; Toulouse, France; November 2021.

- A variance reduced Expectation Maximization algorithm for finite-sum optimization. Séminaire INRAE MaIAGE; Jouy-en-Josas, France; September 2021.

- Optimisation et Méthodes de Monte Carlo : entrelacements. « Petit Séminaire » de l’équipe Statistique et Optimisation de l’IMT; Toulouse, France; April 2020.

—————————–

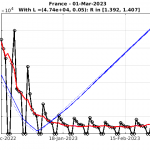

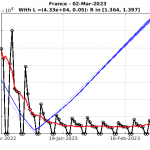

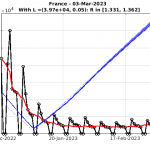

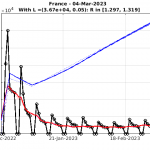

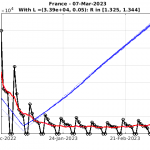

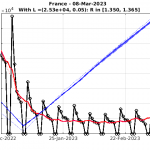

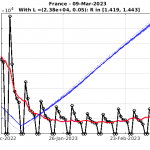

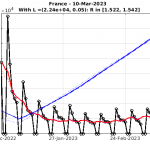

In collaboration with P. Abry (LP-IXXI, CNRS), B. Pascal (CRIStAL, then LS2N, CNRS) and N. Pustelnik (LP-IXXI, CNRS).

Here are examples for France; the estimates are based on the daily number of new infections published by Johns Hopkins University (data from Santé Publique France).

Top: daily number of new infections (black), estimated expected value (blue) and outlier-free data (red).

Middle: maximum a posteriori (blue) and posterior median (red).

Bottom: 95% credibility region shifted by the median (the curves displayed are the fluctuations with respect to the median).

Beware! The Bayesian criterion is updated every day.
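The bottom panel can be computed directly from posterior draws. The sketch below assumes the sampler returns a list of draws, each draw being the vector of reproduction numbers over the days, and builds pointwise equal-tailed 95% intervals before subtracting the daily posterior median, as the caption describes. Whether the published credibility regions are pointwise or joint is not stated here; the pointwise construction and the helper names `quantile` and `centred_credibility_band` are assumptions.

```python
import statistics


def quantile(sorted_vals, p):
    """Empirical p-quantile, linear interpolation between order statistics."""
    pos = p * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (pos - lo) * (sorted_vals[hi] - sorted_vals[lo])


def centred_credibility_band(samples, level=0.95):
    """samples[m][t] = value of the reproduction number on day t in the m-th posterior draw.

    Returns, for each day, (median, lower bound - median, upper bound - median)
    of the pointwise equal-tailed credibility interval at the given level.
    """
    alpha = 1.0 - level
    band = []
    for t in range(len(samples[0])):
        vals = sorted(draw[t] for draw in samples)
        med = statistics.median(vals)
        lower = quantile(vals, alpha / 2.0)
        upper = quantile(vals, 1.0 - alpha / 2.0)
        band.append((med, lower - med, upper - med))
    return band
```

For instance, with the 101 draws 0, 1, ..., 100 for a single day, the function returns one triple equal to (50.0, -47.5, 47.5) up to floating-point rounding; plotting the last two entries of each triple over time gives a band like the one in the bottom panel.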

France: February 2023, January 2023, December 2022, November 2022, October 2022, September 2022, August 2022, July 2022, June 2022, May 2022, April 2022, March 2022, February 2022, January 2022

Serbia: from June 22, 2022 to September 1, 2022.

March 2023, France